Innovation in assessment standard setting

ACER news | 19 Apr 2022 | 7 minute read

An online platform developed by the Australian Council for Educational Research (ACER) to support professional bodies in setting assessment performance standards is one of four finalists in the 2022 e-Assessment Awards ‘Most Innovative Use of Technology in Assessment’ category.

Standard setting is a process of systematically determining the score on an assessment that represents the minimum acceptable level of performance – known as the ‘cut score’.

ACER facilitates standard setting in numerous high- and low-stakes assessment contexts, with a particular focus on medical specialty colleges.

This process begins with training panellists with content expertise on the theory, practice and rationale for standard setting. Panellists then individually review assessment questions and provide ratings of the score they would expect from a minimally competent candidate for each question. The rating data is analysed in accordance with a standard setting methodology, and then cut scores are finalised through dedicated review meetings involving deliberation and consensus-building.

When standard setting was conducted in face-to-face workshops, panellists recorded their ratings for assessment questions on paper or in spreadsheets, and then uploaded these to ACER through file share systems for data aggregation. But the move to online standard setting in the wake of the COVID-19 pandemic introduced data security risks for this approach. Spreadsheets with sensitive exam content could be accessed in non-secure environments, and material could be inadvertently saved to local computers as part of the process. In addition, panellists could miss recording data or record invalid data. This called for a secure, robust and user-friendly process for collecting panellist ratings.
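The two data-quality problems mentioned above, missing ratings and invalid ratings, can be illustrated with a minimal sketch. It is not the platform's actual validation logic, which the article does not describe. The function name `validate_ratings`, the item identifiers, and the rule that a rating must lie between 0 and the item's maximum score are all assumptions made for illustration.

```python
from typing import Optional


def validate_ratings(
    ratings: dict[str, Optional[float]],
    item_max: dict[str, float],
) -> list[str]:
    """Return a list of problems found in one panellist's ratings.

    `ratings` maps item IDs to the panellist's rating (None if left blank).
    `item_max` maps every item ID in the exam to its maximum score.
    Both structures are hypothetical, chosen only for this example.
    """
    problems = []
    for item_id, max_score in item_max.items():
        rating = ratings.get(item_id)
        if rating is None:
            # The panellist skipped this item or left it blank.
            problems.append(f"{item_id}: missing rating")
        elif not 0 <= rating <= max_score:
            # The rating falls outside the range the item allows.
            problems.append(f"{item_id}: rating {rating} outside 0..{max_score}")
    return problems


# Example: Q2 was left blank and Q3 was rated above its maximum score.
issues = validate_ratings(
    {"Q1": 1, "Q2": None, "Q3": 7},
    {"Q1": 1, "Q2": 1, "Q3": 5},
)
```

Running checks of this kind at the point of entry, rather than after spreadsheets are collected, is one way an online tool could catch such errors before aggregation.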

The standard setting process demanded a data collection, validation and monitoring solution that could handle multiple exam formats and diverse contexts. For example, some medical exams have anatomical images and other high-resolution image types. There are also Extended-Response (ER) item formats, with associated scoring guides where the question and rubric need to be seen together. The scoring guides themselves often have rubrics in a variety of formats (such as numeric, analytical, or holistic) and are heavily context- and client-dependent. Due to these complex requirements, which could not be met by existing solutions, ACER set out to develop a bespoke online platform.

The platform is underpinned by a continuous integration and continuous deployment (CI/CD) pipeline that incorporates a fully automated software test suite. This ensures each new feature works seamlessly with previously developed features. Prototypes for features are written in production-ready code using the latest web-stack technologies. This software development methodology means new features can be deployed within days, and the software can be improved and upgraded in response to feedback without interruption to clients and users.

The first pilot successfully set standards using the Yes/No Angoff method for a multiple-choice question (MCQ) exam for a medical specialist college. The platform was then upgraded to handle the Percentage Angoff and Extended Response Angoff methods.
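As a rough illustration of how Angoff ratings translate into a cut score, the sketch below uses a common textbook formulation: each panellist's item ratings are summed, and the cut score is the mean of those totals across panellists. This is an assumption for illustration only; the article does not specify the analysis ACER applies. The function name `angoff_cut_score` and the sample data are hypothetical.

```python
from statistics import mean


def angoff_cut_score(ratings_by_panellist: dict[str, list[float]]) -> float:
    """Mean across panellists of each panellist's summed item ratings.

    For Yes/No Angoff, each rating is 1 (a minimally competent candidate
    would answer correctly) or 0 (would not).
    For Percentage Angoff, each rating is the estimated probability
    (0.0 to 1.0) that a minimally competent candidate answers correctly.
    """
    panellist_totals = [sum(r) for r in ratings_by_panellist.values()]
    return mean(panellist_totals)


# Hypothetical Yes/No ratings from three panellists on a four-item exam.
yes_no = {"P1": [1, 0, 1, 1], "P2": [1, 1, 1, 0], "P3": [0, 0, 1, 1]}
yes_no_cut = angoff_cut_score(yes_no)  # panellist totals are 3, 3 and 2

# Hypothetical Percentage Angoff ratings from two panellists.
percentage = {"P1": [0.5, 0.75, 0.25, 1.0], "P2": [0.75, 0.75, 0.5, 0.5]}
percentage_cut = angoff_cut_score(percentage)  # both panellists total 2.5
```

In practice the aggregated figure is a starting point: as described earlier, cut scores are finalised through review meetings involving deliberation and consensus-building.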

Feedback from panellists and content managers was sought and resulted in new features, including live dashboards and data displays, necessary to monitor the progress of panellists and facilitate consensus-building discussions that are an integral part of standard setting.

Together, the CI/CD pipeline, the latest web-stack technologies, and the ability to iterate on the product and deliver changes to users within days ensured that the platform provided a quality standard setting experience.

A second trial was carried out with Anglophone and Francophone participants from six African countries for an international study, Monitoring Impacts on Learning Outcomes. The platform needed translation management features so that the interface could be presented in French, as well as mobile phone compatibility due to accessibility needs in the local context. This trial also prompted the development of features for adding, updating, or removing participants during a standard setting study.

Following the success of the online platform during the pilot and trial phases, ACER has developed new features to facilitate the Bookmark standard setting method and Pairwise comparison. Due to the platform's ease of use, it is also being used for other rating activities in low-stakes environments, such as assessment training workshops, as well as data collection activities that do not require standard setting.

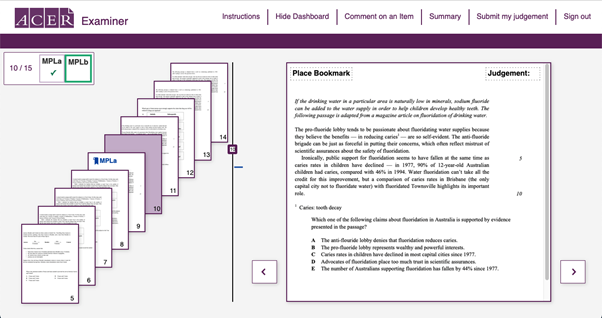

Above: The Hybrid Bookmark and Angoff Yes/No standard setting interface. Image ©ACER.

The announcement of ACER’s Signum standard setting platform as a finalist in the ‘Most Innovative Use of Technology in Assessment’ category of the 2022 e-Assessment Awards is welcome recognition of the platform’s extensive and usable standard setting features that improve the reliability and validity of assessment.

The e-Assessment Awards are organised by the e-Assessment Association, an international not-for-profit membership organisation representing all industry sectors with an interest in e-Assessment. The winners of the 2022 e-Assessment Awards will be announced on 21 June.

Find out more:

Contact ACER’s specialist and professional assessment review team to discuss how ACER can support your assessment practices.